What do software testing and the Giza pyramid have in common? Well, more than you might think! First of all, when software testers work through a thinking process, their perspectives and insights often shift from left to right or up and down. Let me ask you this: do you think the Giza pyramid was a tomb for a Pharaoh? If so, I'd suggest you watch this video first. Pay special attention to the moment at 6 minutes and 5 seconds, where the creator of the video states: IF A PIECE OF IT DOESN'T WORK, IT DOESN'T WORK. This is very often the case when developing software. Give the video a try and see whether your mindset and views change while watching. If they do, remember this moment the next time you're testing a functionality or module of a software information system.


View on Prezi itself for FULL view... (better)

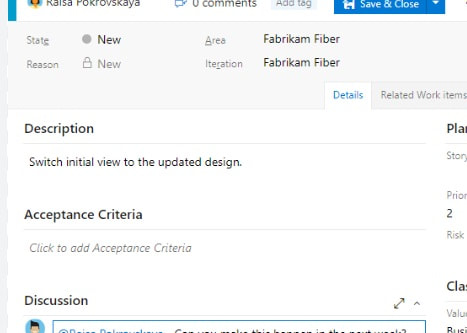

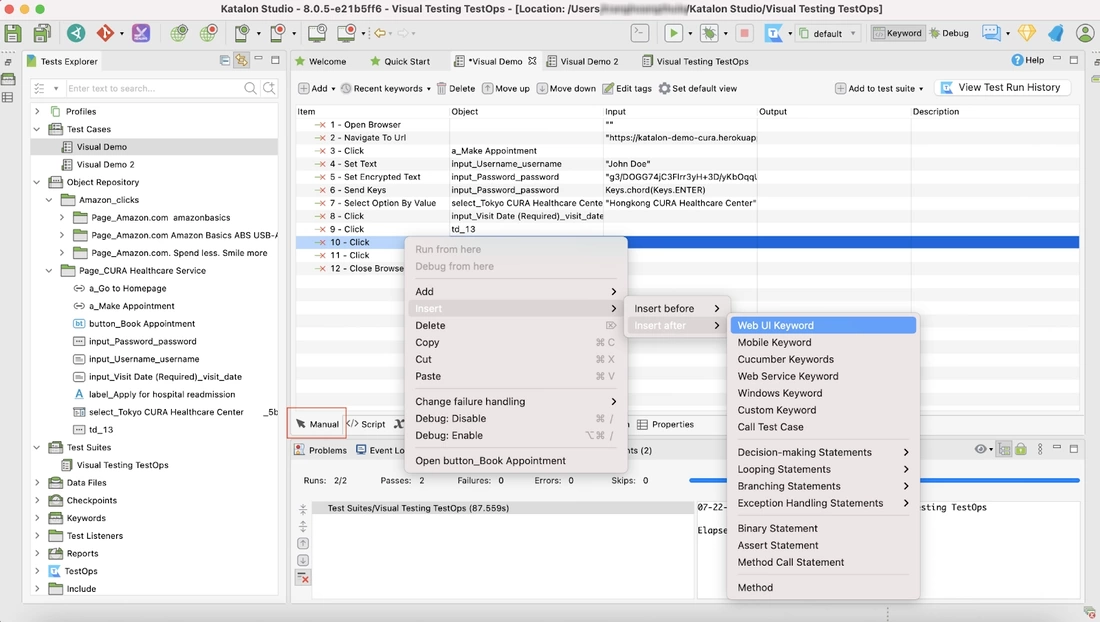

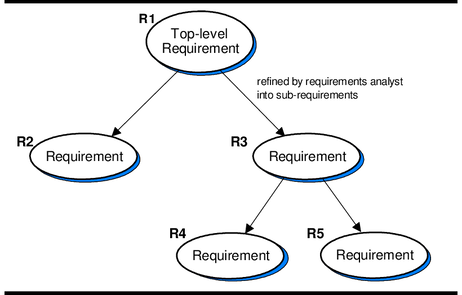

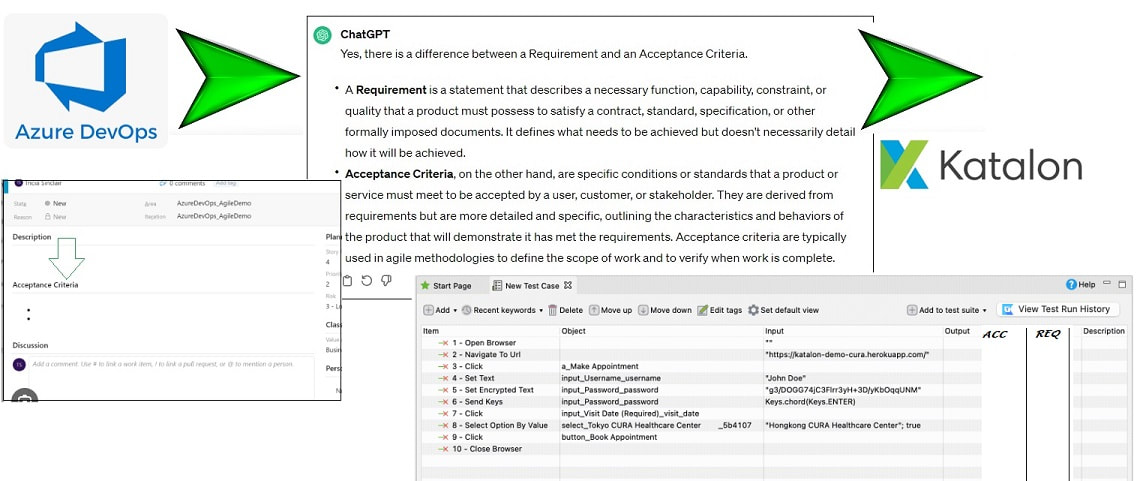

After a two-month hiatus from blogging, I thought it was high time for a fresh entry on this website. Software testing is a critical (thinking) profession and can sometimes be frustrating. It's challenging to jump into the middle of a project without having experienced its origins and without solid background knowledge. One glaring issue that can arise is the lack of clear requirements for an information system, which makes testing extremely difficult.

In recent months, I coined a new term: ACCREQ management. In ACCREQ, 'ACC' stands for Acceptance Criteria and 'REQ' for Requirements. Many test tools pay too little attention to this aspect. Take two simple examples: Azure DevOps and Katalon Studio.

Let's consider Azure DevOps first, where we see a textbox for entering Acceptance Criteria. What happens in practice? Acceptance Criteria for the actual software get haphazardly mixed with various other project aspects. There isn't a quick way to categorize them into sections. Moreover, they aren't automatically numbered but appear as bullet points. So, who will manage these Acceptance Criteria? Who will track them in a system? They often remain only within the Product Backlog Item (PBI), intertwined with all other requirements like 'server availability' or 'API connection', or even with items that have nothing to do with the requirements of the software itself (the programming code). I find it very unfortunate that Azure DevOps has no capability for creating sections (I'd like to drag and drop criteria into sections) and no option for numbering; if you want to store and link them, you'll need to do it manually in a Notepad-like mode.

Now, let's turn to testing tools. Katalon is a really great tool, but if you look closely, there is NO column labeled 'Requirement(s)' and no column named 'Acceptance Criteria'.
So, even if you have an administrative system (for ACCREQ) set up in Azure for describing Acceptance Criteria and Requirements, there is still NO link or column for them in Katalon. This means that when you create an automated test that checks or verifies something, what exactly is that "something"? You're left to decipher it from the test case title and test case steps in Katalon. There's no "way back". If a test fails in the year 2030, you won't be able to see in Katalon which Requirement or Acceptance Criterion it corresponds to. You could manually type it in the Description column, and theoretically, if you had meticulously documented all the Requirements and Acceptance Criteria of the software in a computer system, you could look it up there.

However, reality is often much more complex. In practice, an Acceptance Criterion from Sprint 1 might change in Sprint 3, and it's then added to the current PBI. Or worse: it's added in the comments of a Story. Or even worse: it's not described at all but simply adjusted in the code and pushed to the Master. In short, there is NO "ACCREQ administrative management". Based on my practical experience, if a company didn't have good requirement management from the outset, it will never be properly done retroactively. It becomes very difficult to clarify things, and whether something works well or not becomes a very tricky question.

The ACCREQ administration? This is what it should look like! In my opinion, you should be able to find all of R1 through R5 in the Azure DevOps stories. Updates are made in both the Requirement management tool and in Azure DevOps (not in the Description of a Story, or, as I have seen a lot, even in the 'comments' section... pfffff...).
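To make the idea of numbered, sectioned and traceable criteria a bit more concrete, here is a minimal sketch in Python. It is not how Azure DevOps or any other tool works; the class names, the section labels and the login example are all hypothetical and only illustrate what an ACCREQ register could keep track of: automatic numbering (R5.1, R5.2, ...), a section per criterion, and a history entry whenever a later sprint changes the wording.

```python
from dataclasses import dataclass, field


@dataclass
class AcceptanceCriterion:
    accreq_id: str   # e.g. "R5.3", generated, never typed by hand
    section: str     # hypothetical labels such as "software" or "infrastructure"
    text: str
    history: list = field(default_factory=list)  # (sprint, previous text) pairs

    def revise(self, sprint: int, new_text: str) -> None:
        """Keep the old wording before replacing it, so changes stay visible."""
        self.history.append((sprint, self.text))
        self.text = new_text


@dataclass
class Requirement:
    number: int
    title: str
    criteria: list = field(default_factory=list)

    @property
    def accreq_id(self) -> str:
        return f"R{self.number}"

    def add_criterion(self, section: str, text: str) -> AcceptanceCriterion:
        """Number the criterion automatically as R<requirement>.<n>."""
        criterion = AcceptanceCriterion(
            accreq_id=f"{self.accreq_id}.{len(self.criteria) + 1}",
            section=section,
            text=text,
        )
        self.criteria.append(criterion)
        return criterion

    def by_section(self, section: str) -> list:
        """Return only the criteria filed under the given section."""
        return [c for c in self.criteria if c.section == section]


# Hypothetical example data.
r5 = Requirement(number=5, title="Customer can log in")
r5.add_criterion("software", "Valid credentials open the dashboard")
r5.add_criterion("infrastructure", "Server is available during office hours")
locked = r5.add_criterion("software", "Three failed attempts lock the account")

# Sprint 3 changes a criterion that was written in Sprint 1.
locked.revise(sprint=3, new_text="Five failed attempts lock the account")

print([c.accreq_id for c in r5.criteria])
print([c.accreq_id for c in r5.by_section("software")])
print(locked.history)
```

The point of the sketch is that the change made in Sprint 3 ends up in one place, attached to R5.3, instead of in a PBI comment or only in the code.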

In my opinion, in the TEST TOOL you should also be able to find R1 through "R5.3", all linked together. Have you arranged it to THAT level of detail!? If so, I would say: proceed. Only then continue software development. Otherwise, ask yourself: WHAT is the tool testing, or even better: what is it checking then? It should look more like this: And the software tool that does the administration between them is the glue that holds everything together! What tool is that? I think it is a BIG gap in the market.

- [ ] Azure and the like have things to do.
- [ ] Katalon and the like have things to do.
- [ ] The ICT community is in need of a Requirements and Acceptance Criteria administration tool that glues/links/connects everything together!

We are in need of such a tool. But that's just my humble opinion.
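As a rough illustration of what that "glue" could do, the Python sketch below links automated tests to ACCREQ IDs. Everything in it is an assumption made for the example: the register contents, the `AutomatedTest` record and the function names are hypothetical and do not correspond to any Katalon or Azure DevOps feature. It shows two checks: which criteria have no test linked to them, and which criterion a failed test was actually checking.

```python
from dataclasses import dataclass

# Hypothetical ACCREQ register: ID -> wording of the criterion.
ACCREQ = {
    "R5.1": "Valid credentials open the dashboard",
    "R5.2": "Server is available during office hours",
    "R5.3": "Five failed attempts lock the account",
}


@dataclass(frozen=True)
class AutomatedTest:
    name: str
    covers: tuple   # ACCREQ IDs this test checks
    passed: bool


def uncovered(register: dict, tests: list) -> list:
    """Return the ACCREQ IDs that no test is linked to."""
    linked = {accreq_id for t in tests for accreq_id in t.covers}
    return sorted(set(register) - linked)


def explain_failures(register: dict, tests: list) -> list:
    """For every failed test, report which criteria it was checking."""
    return [
        f"{t.name} failed: {accreq_id} "
        f"{register.get(accreq_id, '<not in register>')}"
        for t in tests
        if not t.passed
        for accreq_id in t.covers
    ]


# Hypothetical test run.
results = [
    AutomatedTest("login_happy_path", ("R5.1",), passed=True),
    AutomatedTest("login_lockout", ("R5.3",), passed=False),
]

print(uncovered(ACCREQ, results))
print(explain_failures(ACCREQ, results))
```

With a link like this, a test that fails years later still points back to the criterion it was written for, and a criterion without any test shows up as a gap instead of going unnoticed.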
