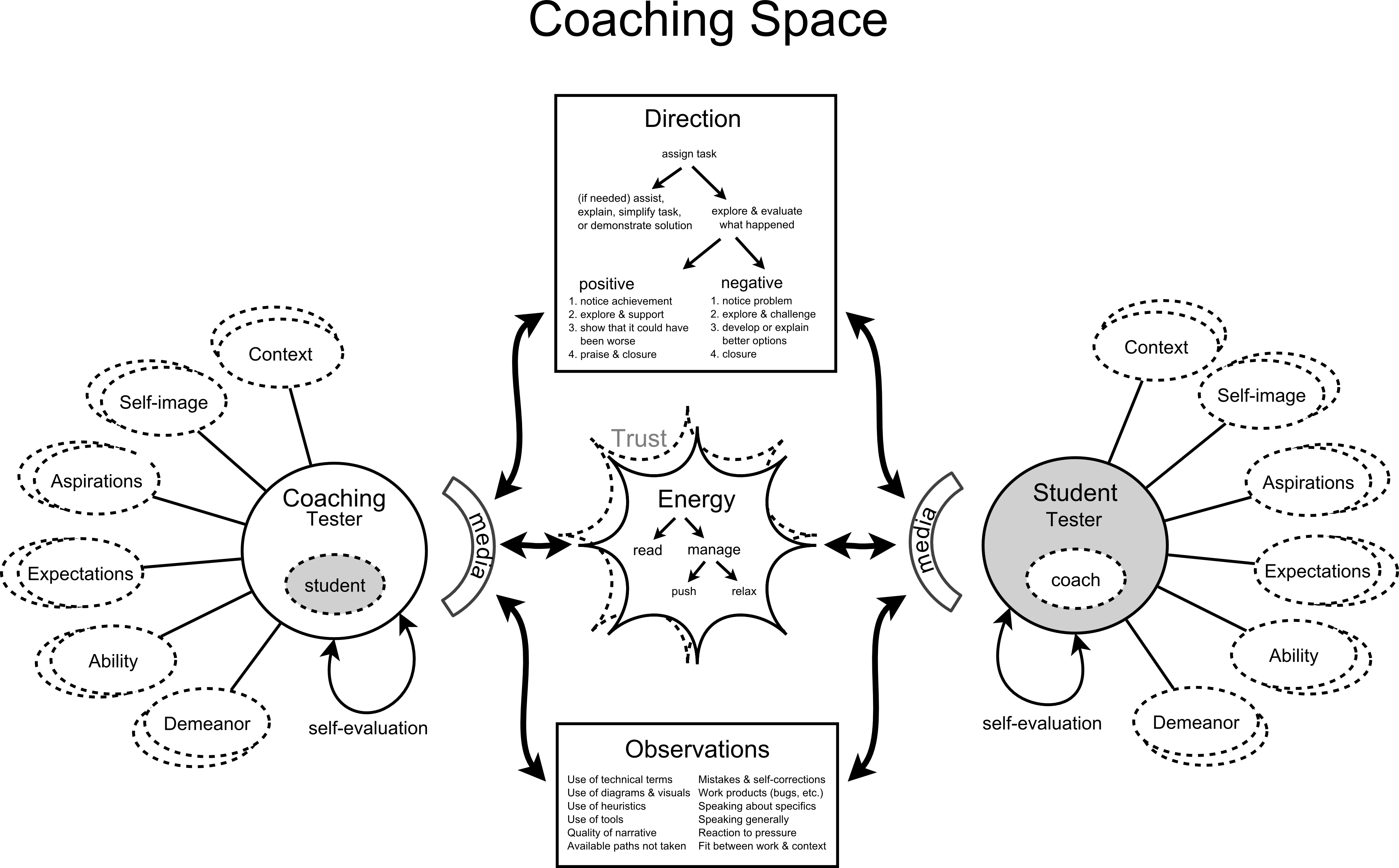

It is October 2018, and the test community is far from done talking about Artificial Intelligence and software testing. If anything, the amount of discussion about it on Twitter and other communication channels seems to be on the rise. TestNet was crowded; in my opinion it was a great success.

Yesterday I read a most interesting article called 'Free Online Coaching on software testing'. I especially liked the picture: https://mavericktester.com/2018/02/12/2018-2-12-online-coaching-on-software-testing/

I liked the picture so much because there is a strong truth in it about how we testers should work with and learn from each other. But if we apply this vision to Artificial Intelligence, the question arises: should an AI be able to learn from another AI? People need other people. Does that mean an AI needs another AI as well? And what about the other way around: can a human learn from an AI? Can one AI coach another AI? Can an AI have a "personality"? Or should it have one?

What if...?

AI says: good idea. Human says: bad idea.

AI says: bad idea. Human says: good idea.

AI says: good idea. Human says: good idea.

AI says: bad idea. Human says: bad idea.

Will it always remain possible to determine how an AI came up with a certain answer? Or, just as with humans, is the result a chemical process in the brain that can hardly be explained to another person, like in the song "Accidentally in Love" by Counting Crows? https://www.youtube.com/watch?v=2yN4-E81wdc

I think we need to start thinking about when the weight should be more on the AI side and when on the human side.

What does all this have to do with the title of the blog? Well, since Artificial Intelligence is a hot topic in the software test community: what exactly would make someone an excellent AI tester? For example, would he or she understand what type (think Enneagram) of AI he or she is communicating with? Or is that just a strange thought too?
https://www.youtube.com/watch?v=1JG2CIYd4TI vs...

The last thing I want to mention is that, besides the exciting side of Artificial Intelligence, I notice a more disturbing effect. It has to do with the number of devices that are connected to other devices. What's so scary about that? The scary part is that the world, in my opinion, has not yet reached the much-needed level of understanding of how software testing works, let alone how AI testing should be done. That could result in unpredictable effects (for example, companies in certain countries shamelessly hacking companies on the other side of the world, just because it's child's play for them). In other words, my point is:

There are no (worldwide) rules or laws for using AI yet!? (I don't think there is anything, or at most very little, in the law books, or in Amnesty International's notes for that matter.) Are we okay with that? Or do we (AI and humans) have some (test) work to do? :)
